GitHub Weekly Top 10 Trends (10 Aug 2025)

This week’s GitHub trending projects showcase the incredible diversity and innovation happening across the open-source community. From powerful AI coding assistants and lightweight large language model inference engines, to intuitive terminal tools, robust file sharing servers, and cutting-edge graphics libraries for embedded devices, developers are pushing the boundaries of what’s possible. Whether you’re interested in making your workflow more efficient, building smarter applications, or exploring new ways to interact with technology, these projects offer practical solutions and inspiration for every developer. Dive in to discover the tools and ideas shaping the future of software development!

For past weekly trends, see Weekly Tags.

Dyad

Dyad is an AI-powered development environment designed to streamline application creation through conversational programming. Built on Electron, Dyad offers a sophisticated desktop application where users interact with AI via a chat interface to create, modify, and deploy applications. The platform manages file system operations, version control, and live preview functionality automatically, allowing developers to focus on building their projects. Dyad’s architecture features a clear separation between the main and renderer processes, connected through a secure IPC layer. The main process is responsible for system operations, file management, and external service integrations, while the renderer process handles the React-based user interface and user interactions. This design ensures both robust functionality and a seamless user experience.

MCP Curriculum for Beginners

MCP Curriculum for Beginners is an educational project designed to help developers learn and implement the Model Context Protocol (MCP). This resource offers comprehensive tutorials, practical examples, and best practices for building MCP servers and clients in various programming languages. Whether you are new to MCP or looking to deepen your understanding, the repository provides step-by-step guidance and hands-on materials to support your learning journey.
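
To give a feel for what an MCP client sends, here is a minimal sketch of the message framing. MCP messages follow JSON-RPC 2.0, and `tools/list` and `tools/call` are methods defined by the protocol. The helper `make_request`, the tool name `get_weather`, and its arguments are hypothetical and exist only for illustration; a real client would also perform the `initialize` handshake and use a transport such as stdio or HTTP.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC uses the id to match responses to requests.
_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict (hypothetical helper, not an SDK call)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invoke one tool by name. "get_weather" and its arguments are made up here.
call_tool = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

In practice the official SDKs covered by the curriculum handle this framing for you; the sketch only shows the shape of the messages on the wire.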

Ollama

Ollama is a local large language model (LLM) server that runs models such as Llama, Gemma, and Mistral directly on your own hardware, so your data never leaves your machine. It can be driven through a command-line tool, a REST API, or OpenAI-compatible endpoints, and it manages the entire model lifecycle, including pulling, creating, versioning, and distributing models. Ollama supports GPU acceleration via CUDA, ROCm, and Metal, with automatic resource management to make the most of your hardware.
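
As a rough illustration of the REST API, the sketch below builds a non-streaming request for Ollama's `/api/generate` endpoint using only the Python standard library. It assumes the server is listening on its default address, `http://localhost:11434`, and that the model you name has already been pulled. The helper names `build_generate_request` and `generate` are our own, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model, prompt, base_url=OLLAMA_URL):
    """Build (but do not send) a POST request for the /api/generate endpoint."""
    body = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt):
    """Send the request and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, `generate("llama3", "Why is the sky blue?")` would return the completion as a string; the model name here is only an example. Setting `stream` to `False` asks for a single JSON object instead of a stream of partial responses.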

Actual Budget

Actual Budget is a personal finance tool designed with a local-first architecture, ensuring that all data and processing occur on the user’s device by default for maximum privacy. It offers a range of features to help users manage their finances effectively, including envelope-based budgeting, transaction management and categorization, account synchronization with financial institutions, and rule-based automation. Additionally, Actual Budget supports optional cross-device synchronization and provides robust reporting and visualization tools to give users clear insights into their financial health.
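
For readers new to envelope-based budgeting, the idea is that every unit of income is assigned to a named category ("envelope") before it is spent. The following is a minimal sketch of that idea, not Actual Budget's data model or API; the classes `Envelope` and `Budget` and all amounts are hypothetical. Amounts are stored in cents to avoid floating-point rounding.

```python
from dataclasses import dataclass, field


@dataclass
class Envelope:
    name: str
    budgeted: int = 0  # cents assigned to this envelope
    spent: int = 0     # cents spent from this envelope

    @property
    def available(self):
        return self.budgeted - self.spent


@dataclass
class Budget:
    to_budget: int = 0  # income not yet assigned to any envelope
    envelopes: dict = field(default_factory=dict)

    def add_income(self, cents):
        self.to_budget += cents

    def assign(self, name, cents):
        """Move money from the unassigned pool into an envelope."""
        if cents > self.to_budget:
            raise ValueError("cannot assign more than is left to budget")
        env = self.envelopes.setdefault(name, Envelope(name))
        env.budgeted += cents
        self.to_budget -= cents

    def spend(self, name, cents):
        """Record a transaction against an existing envelope."""
        self.envelopes[name].spent += cents


budget = Budget()
budget.add_income(200_000)           # 2,000.00 of income
budget.assign("Rent", 120_000)
budget.assign("Groceries", 40_000)
budget.spend("Groceries", 12_550)
print(budget.to_budget, budget.envelopes["Groceries"].available)
```

The key invariant is that income equals the sum of all envelope allocations plus whatever is still unassigned, which is what makes overspending in one category visible immediately.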

OpenAI Codex CLI

OpenAI Codex is a local coding agent system designed to assist users with software development tasks by integrating with AI model providers. The repository enables users to execute commands, edit files, and perform coding operations within a secure, sandboxed environment to ensure safety. Codex is implemented as a multi-language, multi-interface system, featuring a shared core protocol that facilitates AI interactions and command execution. This architecture allows Codex to provide flexible and secure AI-powered coding assistance across different programming languages and user interfaces.

OpenAI Cookbook

OpenAI Cookbook is a comprehensive repository offering practical examples and detailed guides for using OpenAI’s APIs and services. Serving as both a community resource and a technical documentation hub, the Cookbook provides developers with real-world implementations and best practices across a wide range of domains, including function calling, embeddings, multimodal processing, fine-tuning, and advanced AI workflows. It is an essential resource for anyone looking to effectively leverage OpenAI technologies in their projects.

llama.cpp

llama.cpp is a high-performance, plain C/C++ implementation designed to enable efficient Large Language Model (LLM) inference with minimal setup across diverse hardware platforms. The repository focuses on providing a lightweight solution with minimal dependencies, making it easy to build and deploy. llama.cpp supports cross-platform operation on CPUs, GPUs, and specialized hardware accelerators, and standardizes model storage using the GGUF format. Its architecture includes advanced performance optimizations such as quantization and hardware-specific kernels, ensuring state-of-the-art speed and efficiency. Additionally, llama.cpp is developer-friendly, offering both command-line tools and programmatic APIs for flexible integration into various workflows.
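
To illustrate why quantization shrinks models, here is a simplified sketch of 8-bit block quantization in pure Python. It is not llama.cpp's code: the block size of 32 and the per-block scale formula are assumptions chosen for illustration, and the quantization types used in real GGUF files differ in detail. Each block of weights is stored as small integers plus one shared scale factor.

```python
def quantize_block(values):
    """Map a block of floats to integers in [-127, 127] plus one scale."""
    amax = max(abs(v) for v in values)
    # An all-zero block gets an arbitrary scale to avoid division by zero.
    scale = amax / 127 if amax > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return scale, q


def dequantize_block(scale, q):
    """Recover approximate floats from the integers and the block scale."""
    return [scale * x for x in q]


def quantize(weights, block_size=32):
    """Split a flat weight list into blocks and quantize each independently."""
    return [
        quantize_block(weights[i:i + block_size])
        for i in range(0, len(weights), block_size)
    ]


weights = [0.5, -1.0, 0.25, 0.0]
scale, q = quantize_block(weights)
restored = dequantize_block(scale, q)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Because each value is rounded to the nearest multiple of the block's scale, the reconstruction error per weight is bounded by roughly half the scale. Using one scale per small block, rather than one per tensor, keeps that bound tight even when weight magnitudes vary widely across the model.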

copyparty

copyparty is a versatile, self-contained file server that transforms nearly any device into a web-based file sharing platform with support for resumable uploads and downloads. Designed for maximum portability and minimal dependencies, copyparty requires only Python (2 or 3) to run, with all additional features being optional. It supports a wide range of network protocols—including HTTP/HTTPS, WebDAV, FTP/FTPS, TFTP, and SMB/CIFS—ensuring compatibility with virtually any client or application. The primary interface is a feature-rich web application offering file browsing, uploading, media playback, search, and administrative tools, making copyparty a powerful solution for easy and flexible file sharing.

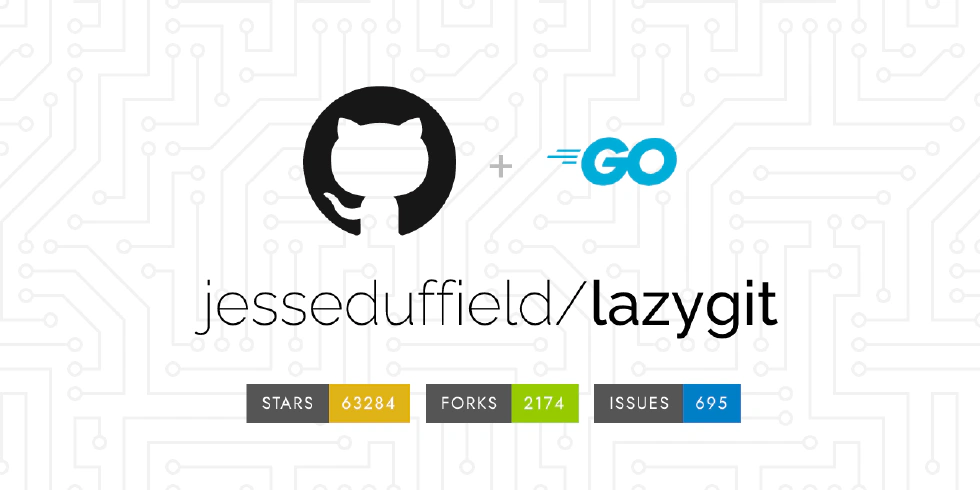

Lazygit

Lazygit is a terminal-based Git client designed to simplify version control by offering an intuitive, panel-based interface for managing Git repositories. It enables users to perform common Git operations—such as staging files, creating commits, interactive rebasing, cherry-picking, and branch management—using convenient keyboard shortcuts, eliminating the need to remember complex command-line syntax. Lazygit provides a clear, multi-panel view of repository state, making Git workflows faster and more accessible directly from the terminal.

LVGL

LVGL (Light and Versatile Graphics Library) is the leading free and open-source embedded graphics library for building modern, visually appealing user interfaces on any microcontroller (MCU), microprocessor (MPU), and display type. LVGL offers a comprehensive graphics framework, from basic drawing primitives to advanced widget systems, allowing developers to create sophisticated GUIs with minimal resource requirements. Fully portable and lightweight, LVGL is implemented as a C library (C++ compatible) with no external dependencies, requiring as little as 32kB RAM and 128kB Flash. It features over 30 built-in widgets, a powerful style system, and web-inspired layouts, supporting a wide range of display technologies including monochrome, ePaper, OLED, and TFT. LVGL is widely adopted by industry leaders such as Arm, STM32, NXP, Espressif, Arduino, and more, and is used in thousands of projects from simple IoT devices to complex industrial systems.
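
Some quick arithmetic shows why memory is the constraint that matters on small devices. The sketch below computes the size of a full frame buffer and of a smaller partial draw buffer for a given display. The function names, the 320x240 16-bit display, and the one-tenth buffer fraction are illustrative assumptions rather than LVGL API or required settings.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to hold one full frame at the given color depth."""
    return width * height * bits_per_pixel // 8


def partial_buffer_bytes(width, height, bits_per_pixel, fraction=10):
    """Bytes for a draw buffer covering 1/fraction of the screen."""
    return framebuffer_bytes(width, height, bits_per_pixel) // fraction


# A 320x240 TFT at 16 bits per pixel (assumed example display).
full = framebuffer_bytes(320, 240, 16)
partial = partial_buffer_bytes(320, 240, 16)

# A 128x64 monochrome display at 1 bit per pixel.
mono = framebuffer_bytes(128, 64, 1)

print(full, partial, mono)
```

A full 16-bit frame for the TFT example takes 153,600 bytes, far more than 32 kB, while a one-tenth buffer takes 15,360 bytes. Rendering the screen piece by piece through a small buffer like this is one way a GUI can fit on a microcontroller with very little RAM.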
